I Spent 30 Days on ONE AI Prompt: 12,000 Reviews Later

I spent a full month on one AI prompt. Thirty days. One prompt.

Everyone else was collecting prompt libraries and sharing “the ultimate ChatGPT cheat sheet,” and I was sitting there tweaking the same 200 words over and over.

I thought I was being stupid.

I thought I was missing something everyone else understood…

Turns out I was accidentally discovering something that would change how I work forever. I just didn’t have a name for it yet.

Now I call it the Intelligence Merge.

And that single prompt I obsessed over for a month?

It’s handled over 12,000 customer reviews in the 24 months since.

Still running. Still accurate. Still sounding exactly like it should.

Here’s how it happened.

The Problem: 500 Reviews and Zero Time

It was January 2023, right after ChatGPT launched.

I had a client, a restaurant with multiple locations, sitting on 500+ Google reviews that needed responses.

And I don’t mean generic “thanks for your feedback” copy and paste jobs.

Every single one needed to sound personal. Authentic.

Like the owner actually read it and cared.

I did the math on doing it manually. At least a week of work. Maybe longer.

The reviews were piling up.

The client was depending on me.

And I had no idea how I was going to make this work.

Have you ever stared at a task that felt completely impossible? Not hard. Impossible.

That was me.

Drowning in five-star praise and one-star complaints, knowing each one deserved a real response, and knowing I couldn’t physically do it.

The Failed Solution: Hiring Help

So I hired a VA.

Spent two hours on Zoom training him.

We worked out a whole system together.

He’d custom-write well-thought-out draft responses and make them fun and personalized.

We did one during the call and it worked great.

Then reality hit.

After four or five days, he’d done maybe 30 reviews.

He started going generic. Doing ten a day and calling it quits.

I don’t know if he got bored or picked up another job on the side, but the bottom line was clear: this wasn’t going to work for me.

I actually tried another VA on a different project around the same time.

Asked him to mock up a simple landing page. He’d do one section, then go completely off in a random direction.

When I asked why, he said, “I thought that’s what you wanted.”

Here’s what I learned from both experiences:

Training is required either way.

Whether you’re training a human or training an AI, you’re going to spend hours teaching them your business, your voice, your standards.

The investment is similar.

The difference is what you get back (depending on the task, of course).

AI doesn’t need days off. It doesn’t go off in random directions because it “thought that’s what you wanted.”

And it doesn’t take a side job and put your stuff on the back burner.

The training investment is similar. The consistency of output is not.

The $100 That Changed Everything

With the VA approach dead for this particular task, I found a guy online who would teach me how to automate review responses with AI.

I had never used AI before and this was totally new to me.

It cost me $100 for a Zoom session.

- He showed me that Zapier could automatically listen for new Google reviews.

- He showed me the OpenAI API and how to connect it.

- I set it up in my own account while he walked me through it on his screen.
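If you’d like to see roughly what that connection looks like under the hood, here is a minimal Python sketch. It only builds a request body in the style of OpenAI’s Chat Completions API and prints it; it does not call the API or Zapier. The function name `build_review_request`, the model name, and the prompt text are placeholders assumed for illustration, not the actual setup from that session.

```python
import json

# Hypothetical system prompt for illustration only.
SYSTEM_PROMPT = (
    "You write replies to Google reviews on behalf of a restaurant owner. "
    "Be warm and personal. Never include phone numbers or email addresses."
)


def build_review_request(reviewer_name, star_rating, review_text,
                         model="gpt-4o-mini"):
    """Return a Chat Completions style request body for one review."""
    user_message = (
        f"Reviewer: {reviewer_name}\n"
        f"Rating: {star_rating} out of 5\n"
        f"Review: {review_text}\n\n"
        "Write a reply to this review."
    )
    return {
        "model": model,  # assumed model name; use whatever your account offers
        "messages": [
            # The system message carries the business voice and the rules.
            {"role": "system", "content": SYSTEM_PROMPT},
            # The user message carries the review that just came in.
            {"role": "user", "content": user_message},
        ],
    }


if __name__ == "__main__":
    body = build_review_request("Joe", 1, "My meal didn't taste good.")
    print(json.dumps(body, indent=2))
```

In an automation tool, the review fields would come from the “new review” trigger, and the body would be sent to the API by the tool’s OpenAI step. The part worth your time is the system prompt, which is where the month of work described below went.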

Then he gave me his prompt.

I formatted it for the restaurant.

And it failed pretty badly.

Before (What the AI Actually Produced):

“Hi Joe, We’re sorry to hear that your meal didn’t taste good. Please call us at 480-555-5555 or email us at [email protected]”

The AI inserted fake 555 phone numbers for no reason.

It made up email addresses that didn’t exist. It called customers by the wrong name.

It was a whole mess of random errors that would have embarrassed the client if any of them had gone live.

The guy who sold me the training said, “You’ll probably have to work on the prompt to get it good.”

So I did.

I just didn’t expect what “working on it” would actually mean.

30 Days. 12 Sessions. 1 Prompt.

I didn’t work on it every single day. That would be obsessive.

About three times a week, I’d sit down and run tests.

Twelve sessions total over roughly a month.

Each time I’d feed sample reviews through the system, look at what went wrong, and adjust.

- I added more context about the business.

- I refined the voice.

- I built in guardrails so it wouldn’t make up phone numbers or invent email addresses.

- I taught it what the restaurant would never say.
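Those guardrails lived inside the prompt itself. A complementary idea, which was not part of the original setup, is to scan each draft reply for the exact failures described earlier before anything goes live. The sketch below is a rough Python illustration under that assumption: the function name `check_reply`, the regular expressions, and the sample text are all hypothetical, and the patterns are deliberately loose.

```python
import re

# Loose patterns for US-style phone numbers and email addresses.
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Captures the name used in an opening greeting such as "Hi Joe,".
GREETING_RE = re.compile(r"\s*(?:Hi|Hello|Dear)\s+([A-Za-z'-]+)")


def check_reply(reply, reviewer_name, allowed_contacts=()):
    """Return a list of problems found in a draft reply (empty if clean)."""
    problems = []

    # Flag any phone number or email that the business has not approved.
    for found in PHONE_RE.findall(reply) + EMAIL_RE.findall(reply):
        if found not in allowed_contacts:
            problems.append(f"unapproved contact detail: {found}")

    # Flag a greeting that uses a different first name than the reviewer's.
    parts = reviewer_name.split()
    first_name = parts[0].lower() if parts else ""
    greeting = GREETING_RE.match(reply)
    if greeting and first_name and greeting.group(1).lower() != first_name:
        problems.append(
            f"greets {greeting.group(1)} but reviewer is {reviewer_name}"
        )

    return problems


if __name__ == "__main__":
    draft = "Hi Joe, please call us at 480-555-5555 or email owner@example.com"
    for problem in check_reply(draft, "Joe Smith"):
        print(problem)
```

Running a batch of sample reviews through a check like this after each prompt change gives a quick read on whether an adjustment fixed the problem or just moved it.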

Slowly, the outputs got better.

And better.

And better.

Sound familiar?

That feeling of something almost working, but not quite?

That’s where I now see a lot of people quit.

They try AI, get garbage, and decide they’re “not good at prompts” or AI “doesn’t work for their industry.”

I was too stubborn to quit. I needed this to work.

The Test That Proved It Worked

I figured if I was going to trust this system, I needed to test it with the hardest case I could find.

Someone had left a massive bad review. Over 400 words of angry customer venting about a terrible experience at one of the locations.

The food was wrong. Service was slow. The manager was rude. The whole nightmare scenario.

I fed it into the system and waited.

If it could handle THAT, I’d know I nailed it.

After (What the AI Produced After 30 Days of Refinement):

“Hi Joe, We’re sorry to hear that your food didn’t taste good. We have made the managers aware of the issue. If you’d like to come back by the restaurant, show us this review and we will take care of you. We appreciate you taking the time to leave this feedback as it’s very important to us to make sure that every customer has a good and consistent experience. We take comments like this to heart and we want to make everything right.”

No fake phone numbers. No made-up emails. No wrong names.

Just a genuinely good response to a genuinely difficult review.

Note: An AI-driven review response system is golden, especially for one- and two-star reviews. A lot of business owners get mad or sad, or simply don’t respond, which in my opinion is suicide. People reading those reviews figure the business doesn’t care about bad feedback, so how good can the food, service, or product be?

That’s when I knew. Not because it sounded like me. But because it handled the hardest case I could throw at it.

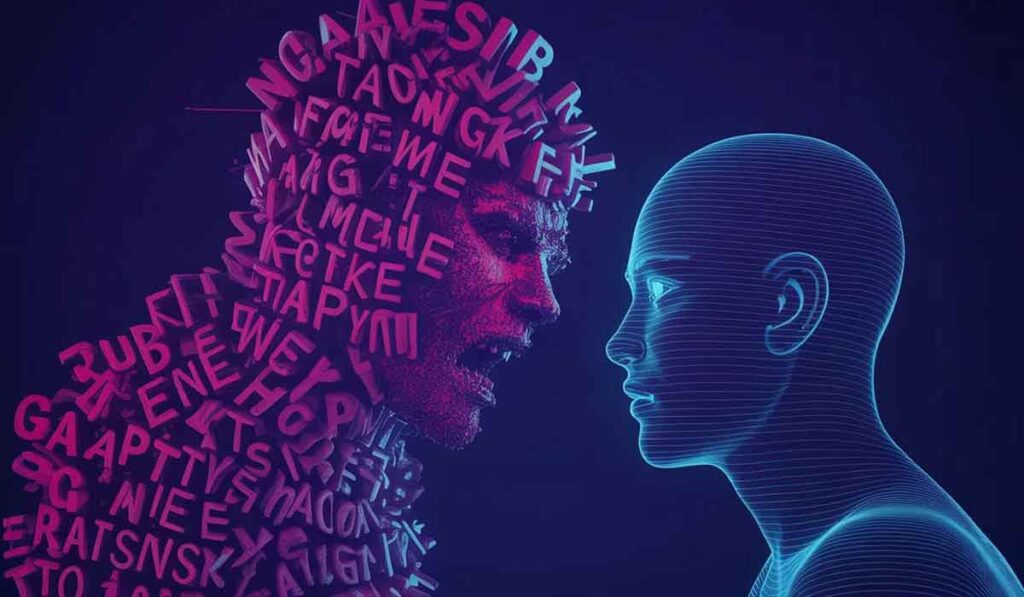

What I Was Actually Doing (The Intelligence Merge)

Looking back, I realize:

- I wasn’t just “fixing a prompt.”

- I wasn’t debugging code or tweaking settings.

- I was teaching the AI how the restaurant owner thinks.

- What matters to them.

- How they talk to customers.

- What they would never say.

- Their values.

- Their voice.

- Their way of handling problems.

I was extracting the restaurant’s intelligence and merging it with AI’s capabilities.

I just didn’t know that’s what it was called yet.

That month of tweaking?

Those twelve sessions of testing and refining?

That was my first Intelligence Merge.

I didn’t read about it in a course. I didn’t follow someone else’s framework.

I stumbled into it because I was stubborn enough to keep going when the generic approach failed.

What I was actually doing was building custom AI intelligence from real expertise.

- Not collecting prompts.

- Not copying templates.

- Not accepting “AI slop” the way I’m afraid many people do.

- Extracting knowledge and teaching it to AI until the outputs felt right.

The Results: 12,000 Reviews and Counting

That one prompt I spent a month on? It’s still running.

Twenty-four months later. About 500 reviews per month on average.

That’s over 12,000 reviews handled by one Intelligence Merge.

Twelve sessions of work in January 2023.

- Still producing value today.

- Still accurate.

- Still on-brand.

The restaurant has added locations since then and I’ve massaged the prompt slightly, but it’s about 80% the same as when I finally got it right.

Every single client I’ve worked with since has some version of this approach.

Because the work I put in extracted something real.

Something that compounds over time instead of breaking every time a new AI tool comes out.

What This Means For You

Here’s why I’m telling you this story.

You’ve probably tried AI. Got generic garbage back. Thought you were doing something wrong.

You weren’t.

You were just using AI like everyone else uses it.

Like a search engine.

Ask a question, get an answer, paste it somewhere.

That’s what 95% of people do.

But there’s another way.

You can extract YOUR expertise. YOUR patterns. YOUR voice.

And merge it with AI’s capabilities.

That’s the Intelligence Merge.

And if I could figure it out by accident, tweaking one prompt for a month because I was too stubborn to quit, you can figure it out on purpose. Faster.

The question isn’t whether AI can help you.

The question is whether you’ll teach it how YOU think, or keep using it like everyone else.

Frequently Asked Questions

What is the Intelligence Merge?

The Intelligence Merge is the process of extracting your expertise, patterns, and voice, then teaching them to AI so the outputs sound like you instead of generic AI slop. It’s the difference between using AI as a search engine and using AI as an extension of your own thinking.

How is the Intelligence Merge different from prompt engineering?

Prompt engineering focuses on crafting clever instructions to get better outputs from AI. The Intelligence Merge focuses on teaching AI who you are first, then letting that knowledge shape every output. One is about technique. The other is about identity.

Do I need technical skills to do an Intelligence Merge?

No. I’m not a programmer. I paid someone $100 to show me how to connect Zapier to OpenAI. The technical setup was the easy part. The hard part was the thinking: figuring out what makes my client’s voice unique and how to teach that to AI.

How long does an Intelligence Merge take?

My first one took about a month of part-time work. Twelve sessions at roughly three times per week. Now that I understand the methodology, I can help people build their first Intelligence Merge much faster. The free foundation interview takes about 60 to 120 minutes and gets you 60-70% of the way there.

What kind of results can I expect?

That depends on what you’re building. My Google review system has handled 12,000 reviews over 24 months. Other clients have used Intelligence Merge for content creation, client communication, and internal processes. The common thread is that the outputs sound like them, not like AI.

Why not just use a prompt library?

Prompt libraries give you someone else’s thinking formatted for AI. That might work for generic tasks. But if you need outputs that sound like you, reflect your expertise, and match your standards, you need to teach AI your own intelligence. No prompt library can do that for you.

Can I do this with any AI tool?

The principles work across tools. I’ve built Intelligence Merges in OpenAI, Claude, and Gemini. The specific setup varies, but the core methodology stays the same: extract your expertise, teach it to AI, refine until the outputs feel right.

How do I know if it’s working?

You’ll know it’s working when you read an AI output and think, “That sounds like me on my best day.” When you can throw the hardest case at it and it responds the way you would. When the outputs stop feeling like AI and start feeling like an extension of your own thinking.

Ready to Build Your Own Intelligence Merge?

If you want to go deeper, I’ve built a free interview process that extracts your expertise in 60 to 120 minutes.

Forty-three questions designed to pull out the patterns, voice, and thinking that make you unique. It gets you 60-70% of the way to a full Intelligence Merge.

Click here if you want to try it.

But either way, the principle is this: stop collecting prompts. Start teaching AI how you think.

That’s the difference between the 95% who use AI tools and prove they’re replaceable, and the 5% who merge their intelligence with AI and become irreplaceable.

Build, don’t scroll.

Teaching the Intelligence Merge Methodology.